Why 95% of vision projects never launch

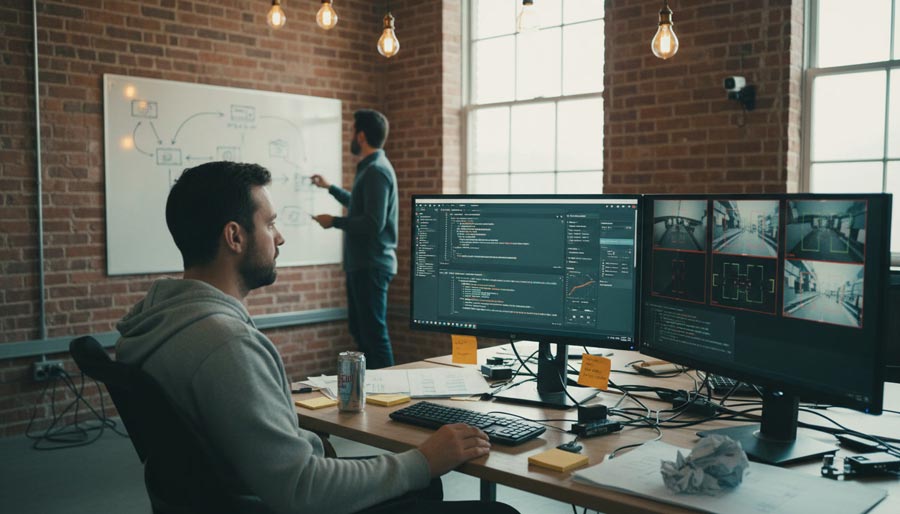

Building computer vision systems sounds straightforward until reality hits. Companies pour months into models that achieve 98% test accuracy, only to watch them stumble when lighting shifts or camera angles change slightly. The gap between laboratory success and production reliability? Brutal. Around 95% of computer vision projects never reach deployment – not because the algorithms lack sophistication, but because teams underestimate everything beyond the model itself.

The disconnect starts early. Business leaders fixate on algorithm development timelines while overlooking critical factors: hardware setup, calibration delays, integration complexities. A 2019 Algorithmia study found that among the lucky 22% who successfully productized AI models, deployment took one to three months even with mature MLOps processes. Another 18% needed more than three months. Success in machine learning vision demands equal attention to data quality, deployment strategy, and continuous iteration – not just fancy neural networks.

Here’s what actually separates projects that ship from those gathering dust in repositories.

Planning beyond the model’s comfort zone

Most computer vision failures originate during planning, where executives set overly ambitious targets without consulting engineering realities. The modeling know-how exists (even for advanced scenarios like autonomous driving), but computing power constraints wreck scaling attempts. Consider Google’s Noisy Student algorithm – it relies on convolutional neural networks with over 480 million parameters. Training that beast demands serious hardware investment, yet many organizations commit to advanced algorithm development and only then discover that their available infrastructure can’t handle proper training or testing.

Smart planning involves examining success stories similar to your business context. Experienced computer vision development teams know that data collection services become essential when high-quality labeled datasets aren’t readily available. In healthcare, where computer vision software sees heavy use, annotation accuracy matters tremendously – errors carry serious consequences. The size of required datasets emerged as the biggest limitation in recent surveys, receiving 42.3% of votes, while high costs came second at 20.4%.

But there’s another wrinkle. Leaders often forget the extra time needed for setup, configuration, and calibration of cameras and sensors. Combined with integration complexities and testing across real-world scenarios, this pushes project timelines far beyond initial estimates. Vision AI implementation isn’t just coding; it’s orchestrating hardware, software, and environmental factors into a cohesive system.

The data dilemma nobody talks about

Computer vision development projects are data-hungry monsters. Models need massive amounts of labeled examples to learn effectively, and acquiring this data proves costly and time-consuming. Public datasets exist for general purposes, but companies in specialized industries struggle – think CT scans in healthcare or rare collision footage for automotive applications. Privacy regulations compound these challenges, while siloed data management systems within organizations make obtaining proprietary data for model enhancement frustratingly difficult.

Interestingly, purpose-built vision AI models are proving highly effective despite smaller datasets. Analysis revealed that 43% of enterprise models were trained with fewer than 1,000 images. With just a few hundred quality images, businesses can develop custom models for specialized challenges, greatly reducing development time and costs. This shift toward efficient, targeted training represents a practical path forward rather than chasing massive dataset requirements.

Deployment environments: cloud, edge, or hybrid?

Where you run your computer vision deployment fundamentally shapes performance, costs, and scalability. Cloud platforms offer rapid scaling ability but introduce latency issues for real-time applications. Local hardware works for testing pilot deployments with idle server resources, though expensive scalability limits growth potential. Edge computing – running models on devices connected to cameras or embedded in equipment – slashes latency for high-definition video transfers but demands more complex architecture and advanced cybersecurity measures.

Consider a farming analytics system monitoring animals across 100 cameras. At 30 images per second per feed, that’s 259.2 million images daily. Sending all that data to the cloud creates bottleneck problems and unexpected cost spikes. The smarter approach? Edge computing vision that analyzes data where it’s generated, communicating only key insights to cloud backends for aggregation and deeper analysis.
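The arithmetic behind that figure is easy to check, and extending it to storage volume hints at why shipping raw frames to the cloud gets expensive. The sketch below reproduces the 259.2 million number from the values in the example; the 200 KB frame size is an assumed figure for illustration only, not a measurement.

```python
# Back-of-the-envelope data volume for the farming example above.
CAMERAS = 100
FRAMES_PER_SECOND = 30
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

# Assumption for illustration only: average compressed frame size in bytes.
ASSUMED_BYTES_PER_FRAME = 200_000  # roughly 200 KB


def daily_frames(cameras: int, fps: int) -> int:
    """Total frames produced per day across all camera feeds."""
    return cameras * fps * SECONDS_PER_DAY


def daily_terabytes(frames: int, bytes_per_frame: int) -> float:
    """Raw daily upload volume in terabytes (1 TB = 10**12 bytes)."""
    return frames * bytes_per_frame / 1e12


frames = daily_frames(CAMERAS, FRAMES_PER_SECOND)
print(f"{frames:,} frames per day")  # 259,200,000 frames per day
print(f"{daily_terabytes(frames, ASSUMED_BYTES_PER_FRAME):.1f} TB per day at the assumed frame size")
```

Swapping in your own camera count, frame rate, and measured frame size gives a quick first estimate before any cloud pricing calculator is involved.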

Real-time processing requirements particularly favor edge deployments. Manufacturing inspections, autonomous vehicles, traffic monitoring – these applications can’t tolerate cloud round-trip delays. According to market analysis, industries with high visual data volumes see faster computer vision ROI from edge-optimized platforms. One industrial automation case cut its manual inspection team by two-thirds (from six people to two), delivering a 20% profit boost and a 250% return on capital within two years.

Distribution shift will wreck your careful plans

Models trained on pristine daylight images often fail miserably in low-light conditions. Systems optimized for specific camera angles misbehave when deployed in new environments. This distribution shift problem plagues industries like agriculture and automotive, where lighting, weather, and sensor variations are constant companions. Tesla’s Autopilot faced scrutiny when cameras misinterpreted bright sunlight as yellow traffic lights – a stark reminder that training data rarely captures every real-world scenario.

Solutions start with data augmentation that simulates diverse conditions during training (lighting changes, occlusions, weather variations). Continuously testing models on edge cases and real-world scenarios catches problems before deployment, and partnering with domain experts helps identify likely shifts and refine training datasets. The commercial pressure is real: the computer vision market is projected to grow from $20.75 billion in 2025 to $58.33 billion by 2032 at a 15.9% CAGR, and according to MIT research on computer vision robustness, it increasingly demands robust solutions that handle messy real-world conditions, not just laboratory perfection.
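To make the augmentation idea concrete, here is a minimal sketch that applies a random lighting change and a random occlusion patch to a grayscale image. It uses plain Python lists so it runs without dependencies; a real pipeline would more likely use an image library such as torchvision or Albumentations. The function names, brightness range, and patch size are all assumptions chosen for illustration.

```python
import random

Image = list[list[int]]  # grayscale image as rows of 0-255 pixel values


def adjust_brightness(image: Image, factor: float) -> Image:
    """Scale every pixel by `factor`, clamping to the valid 0-255 range."""
    return [[max(0, min(255, round(p * factor))) for p in row] for row in image]


def add_occlusion(image: Image, top: int, left: int, size: int) -> Image:
    """Black out a size x size square to mimic an object blocking the view."""
    out = [row[:] for row in image]  # copy so the input is not modified
    for r in range(top, min(top + size, len(out))):
        for c in range(left, min(left + size, len(out[r]))):
            out[r][c] = 0
    return out


def augment(image: Image, rng: random.Random) -> Image:
    """Apply a random lighting change, then a random occlusion patch."""
    factor = rng.uniform(0.3, 1.5)  # assumed range: dim dusk to harsh glare
    size = max(1, len(image) // 4)  # assumed patch size: a quarter of the height
    top = rng.randrange(len(image))
    left = rng.randrange(len(image[0]))
    return add_occlusion(adjust_brightness(image, factor), top, left, size)


rng = random.Random(0)  # fixed seed so runs are reproducible
original = [[128] * 8 for _ in range(8)]
variants = [augment(original, rng) for _ in range(4)]
print(f"Generated {len(variants)} augmented variants from one image")
```

Each training image yields several variants, so the model sees darker, brighter, and partially blocked versions of scenes it would otherwise only meet in production.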

Deployment reality check: what actually breaks

Deployed computer vision systems face constant change. Environments evolve, sensors age or get replaced, user behavior shifts subtly, and new scenarios emerge that weren’t present during training. Construction alters traffic patterns. Seasonal effects change visual appearance. Software updates affect image preprocessing. Without monitoring these changes, performance degradation goes unnoticed until failures become obvious – and by then, tracing the cause proves difficult.

Silent failures pose particular risks in safety-critical applications. Any team running vision AI in production needs to implement:

- Continuous monitoring: Track model performance metrics in production environments

- Drift detection: Automated systems that flag when input data distributions change

- Feedback loops: Mechanisms to capture edge cases and feed them back into training pipelines

- Version control: Maintain model versioning to roll back when updates introduce problems
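As one illustration of the drift detection item, the sketch below tracks a single cheap input statistic, mean frame brightness, and flags drift when recent frames move too far from a reference window. This is a deliberately simple stand-in: the choice of brightness as the signal, the z-score style measure, and the threshold of 3.0 are all assumptions, and production systems often compare richer features with formal statistical tests.

```python
from statistics import mean, stdev


def brightness(image: list[list[int]]) -> float:
    """Mean pixel intensity of a grayscale image (0-255)."""
    return mean(p for row in image for p in row)


def drift_score(reference: list[float], recent: list[float]) -> float:
    """How many reference standard deviations the recent mean has moved."""
    spread = stdev(reference)
    shift = abs(mean(recent) - mean(reference))
    if spread == 0:
        return 0.0 if shift == 0 else float("inf")
    return shift / spread


def has_drifted(reference: list[float], recent: list[float],
                threshold: float = 3.0) -> bool:
    """Flag drift when the shift exceeds the (assumed) threshold."""
    return drift_score(reference, recent) > threshold


# Hypothetical brightness values: daytime footage seen during validation...
reference_window = [120, 125, 118, 122, 121, 119, 124, 123]
# ...versus frames arriving after dusk.
night_window = [60, 58, 65, 62]

print(has_drifted(reference_window, night_window))      # True
print(has_drifted(reference_window, [120, 123, 121, 122]))  # False
```

A flag like this does not say the model is wrong, only that its inputs no longer look like what it was validated on, which is the cue to pull samples for review and feed them back into training.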

Studies show that 99% of computer vision project teams experienced significant delays, for reasons ranging from data quality issues to integration challenges with legacy equipment. The deployment stage, especially for complex systems running in hybrid cloud environments, requires careful planning and preparation.

Small objects, big headaches

Detecting faces in crowds? Tiny defects on products? Distant vehicles in traffic? Small object detection ranks among the toughest challenges. When you resize images for model input, small objects lose crucial details – a 5×5 pixel face might become a single pixel after downsampling. Multi-scale architectures like Feature Pyramid Networks help by processing images at multiple resolutions, and attention mechanisms can focus models on small, important regions. But honestly? Sometimes you just need higher resolution input and more powerful hardware. There’s no magic bullet.
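The loss from resizing is simple proportional arithmetic, sketched below. The frame width of 3200 pixels and model input width of 640 pixels are assumed values picked so the numbers come out cleanly; substitute your own camera and model dimensions.

```python
def size_after_resize(object_px: float, source_side: int, model_side: int) -> float:
    """Side length of an object, in pixels, after the image is resized."""
    return object_px * model_side / source_side


def min_source_size(target_px: float, source_side: int, model_side: int) -> float:
    """Smallest object in the source frame that keeps `target_px` after resizing."""
    return target_px * source_side / model_side


# Assumed example: a 3200-pixel-wide frame shrunk to a 640-pixel model input.
print(size_after_resize(5, 3200, 640))  # 1.0 -> the 5x5 face is now one pixel
print(min_source_size(8, 3200, 640))    # 40.0 -> needs 40 px to keep 8 px
```

Running the second function against the smallest object you care about tells you quickly whether a given camera and input size can work at all.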

One practical trick: if you know roughly where small objects appear (like faces typically occupying upper portions of surveillance footage), crop those regions and process them separately at higher resolution. Not elegant, but it works.
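A minimal sketch of that cropping trick follows, using plain nested lists for the image. The `Box` type and function names are hypothetical helpers, and the detector itself is left out; the point is the bookkeeping needed to cut out a region and map any detections back to full-frame coordinates.

```python
from typing import NamedTuple


class Box(NamedTuple):
    left: int
    top: int
    right: int   # exclusive
    bottom: int  # exclusive


def crop(image: list[list[int]], box: Box) -> list[list[int]]:
    """Cut the region described by `box` out of the image."""
    return [row[box.left:box.right] for row in image[box.top:box.bottom]]


def to_frame_coords(detection: Box, region: Box) -> Box:
    """Translate a box found inside the crop back to full-frame coordinates."""
    return Box(
        detection.left + region.left,
        detection.top + region.top,
        detection.right + region.left,
        detection.bottom + region.top,
    )


# Tiny 4x4 stand-in frame; each pixel value encodes its row and column.
frame = [[r * 10 + c for c in range(4)] for r in range(4)]
region = Box(left=1, top=0, right=3, bottom=2)  # assumed region of interest

patch = crop(frame, region)
print(patch)  # [[1, 2], [11, 12]]

# Suppose a detector run on `patch` reports a hit at this crop-local box.
hit_in_patch = Box(left=0, top=1, right=1, bottom=2)
print(to_frame_coords(hit_in_patch, region))  # Box(left=1, top=1, right=2, bottom=2)
```

If the crop is also upscaled before inference, detections need to be divided by the scale factor before this translation step.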

Making it work long-term

Success in computer vision development comes from understanding these fundamental challenges and applying proven solutions systematically. Don’t try solving everything at once. Start with data quality, nail your annotations, then tackle specific deployment challenges one at a time. Use transfer learning, monitor production performance, iterate constantly.

A separate market estimate puts the global computer vision market at $28.40 billion in 2025 and forecasts it to climb to $58.60 billion by 2030, reflecting a 16.0% CAGR. Manufacturing leads with 37.5% revenue share, while automotive ADAS applications expand quickest at 21.0% CAGR through 2030. These numbers reflect real business impact from teams who understand that computer vision is 20% model architecture and 80% everything else: data quality, deployment strategy, continuous improvement.

Organizations investing in robust machine learning vision capabilities aren’t just improving model accuracy – they’re building resilience into their systems, and that resilience becomes a competitive advantage over time. Teams iterate faster, respond to failures with clarity, and deploy models with greater confidence. As computer vision moves into high-stakes, real-world applications, the question isn’t whether data and deployment matter. It’s whether organizations are prepared to manage them with the same care given to models, infrastructure, and product design.

The path from concept to deployment isn’t straightforward, but it’s navigable for teams willing to look beyond algorithm hype and embrace the unglamorous work that actually makes vision systems reliable in production.